If you haven’t played around with ChatGPT yet, you should skip the rest of this newsletter and just try it out for yourself.

ChatGPT is a chatbot built on a large language model (LLM); it generates text in response to questions or prompts.

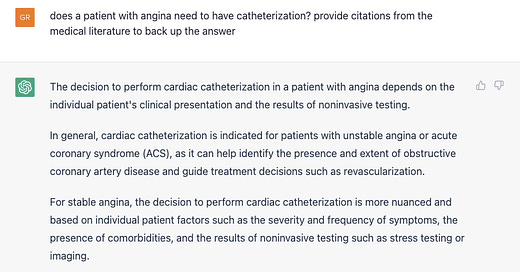

It’s fascinating to use. I asked ChatGPT about doing a cardiac catheterization for a patient with chest pain, and it instantly gave me a nuanced, correct (albeit superficial) answer.

We can quibble with the literature it cited (I would have referenced the ISCHEMIA trial, and the meta-analysis it mentioned is from 2012), but that output is shockingly high quality, probably at the level of an early internal medicine resident.

It’s far better than I expected, and probably similar in quality to how many non-cardiologists would answer.

And now researchers have shown that ChatGPT performs at or near the passing threshold on all 3 steps of the United States Medical Licensing Exam (USMLE).

Passing the medical boards is not easy… this is pretty impressive

The USMLE exams are really hard!

When I was in med school, most of us spent 6 weeks studying for Step 1 of the USMLE.

That’s 6 weeks of full-time studying, 8 to 12 hours per day. Before Step 1 became pass/fail, your score was a huge determinant of which residencies you could get into.

And now AI can perform at or near a passing level without any specialized training.

This isn’t a test of facts that are easy to look up; these are multistep questions that require several layers of reasoning to answer correctly.

The questions often require you to make a diagnosis, know the right treatment, and then understand the side effects of that treatment.

And ChatGPT doesn’t just answer the questions correctly; it explains its reasoning in a way that can aid learning. As the study’s authors put it:

“These data indicate that a target human learner (e.g., such as a second-year medical student preparing for Step 1), if answering incorrectly, is likely to gain new or remedial insight from the ChatGPT AI output. Conversely, a human learner, if answering correctly, is less likely, but still able to access additional insight.”

But just because this is impressive doesn’t mean it’s ready for prime time

One of the biggest problems with this technology is that it’s wrong too often, and its incorrect answers are delivered with the same confidence as its correct ones.

In this way, ChatGPT is pretty similar to humans.

But in a world where medical errors may cause as many as 100,000 deaths a year, an automated decision maker that can hallucinate answers isn’t going to solve the problem.

There’s also a big difference between passing a standardized test and delivering good patient care. Just ask any physician how confident they felt about practicing independently after passing all 3 steps of the USMLE.

Even with all the necessary caveats, this is amazingly exciting technology with real potential to improve healthcare.

Diagnosis is the wrong place to focus AI in healthcare

I’m not suggesting that doctors are infallible. We make mistakes all the time, and we certainly get a lot of diagnoses wrong.

It would be great to have an AI tool that gets this stuff right more often than I do, so that my patients get better answers about what’s going on with their health.

But I think the focus on diagnosis, or on visual specialties like radiology and dermatology, is going to have minimal impact on most healthcare delivery in America.

That’s because making a diagnosis is rarely the rate-limiting step in care delivery.

Where’s the AI tool to do a medication reconciliation for our complicated patients who get care in multiple health systems and have prescriptions at a bunch of pharmacies?

Where’s the AI tool that can refill a medication in fewer than a dozen clicks and five multi-second pauses while toggling between screens?

Where’s the AI tool that can generate a printout for our patients about the purposes of their medications, the important side effects to watch out for, and the potential interactions, so that they don’t need to call the office 10 minutes after their appointment to go over these questions?

Where’s the AI tool that can remind a patient who is prescribed a diuretic that they need to get their bloodwork done in a week to see if their potassium is too low?

Where’s the AI tool to describe the names and purposes of the tests that we order during a visit so the patient doesn’t leave confused about where they need to go and why?

In other words, while I would certainly welcome an AI tool that saved me from an incorrect diagnosis, that’s not the area that will move the needle for most healthcare delivery.

AI deployed in healthcare promises to entrench habits of overprescribing and over-medicalizing

Do you think an AI tool that’s developed by learning from contemporary medical practices is going to prescribe medications with a light touch?

No way. Doctors are trained to treat symptoms and abnormal test results with medications and procedures.

An AI model that has learned those solutions is going to prescribe more medications and recommend more surgeries.

Think about the big money at stake in crafting research to manipulate an AI physician’s view of the right treatment. Big Pharma’s arms race to generate data persuading the AI that its drug is the best for condition X, Y, or Z is going to make today’s landscape of mostly useless observational research that generates media clickbait feel quaint.

A widely deployed AI that scrutinizes the medical research to come up with a diagnosis and treatment plan has serious dystopian potential.

We’re not talking end-the-world or kill-us-all potential, at least not yet.

But we are looking at a lot of potential for suboptimal care based on low-quality data, like all of us ending up on atenolol for hypertension and simvastatin for lipid lowering.

Just as easy access to medical information has created an arms race of insipid, generic content, widely deployed medical AI could easily be manipulated to make big money for big business while providing suboptimal care for the masses.

My other big concern with AI in medicine: worsening gaps in health equity

The utopian vision of an AI doctor in everyone’s pocket, democratizing access to healthcare and helping overcome health disparities, is the best-case scenario, but it’s pretty unlikely.

And while maybe I’m motivated by a fear of losing my job, I don’t think you should underestimate the risk that AI doctors make healthcare worse for poor people while the rich can afford a human.

And so poor people end up on an SSRI when their heart rate variability drops, or on another antihypertensive after a few bad nights of sleep, while wealthy people have access to a human doctor who isn’t beholden to the same rules as the large language model.

The most likely outcome here: AI won’t become your doctor, but your doctor will be supervising an AI

Ultimately, this is nascent technology that has the potential to disrupt almost every single industry that exists.

And based on what I’ve seen, physician organizations are going to successfully lobby for rules that ensure AI augments our work rather than replaces us.

The AMA has been working on billing codes for AI-assisted work. As in many industries, entrenched interests are likely to prevail.

Healthcare is unique in that a high profile mistake can torpedo an exciting potential technology.

Just think about what happened to gene therapy after Jesse Gelsinger’s death in 1999: a decades-long winter for a potentially game-changing technology after one catastrophic outcome that got a lot of media attention.

So this is promising technology that is still in its infancy. And while you should pay attention to how it starts to be deployed in healthcare, you should also be skeptical that AI will do much to move the needle on health outcomes.